Developing and implementing tests for Wi-Fi involves many of the same challenges you would expect when testing any wireless or RF technology. With the new TR-398 Issue 2 test plan, a strong focus has been on creating a repeatable set of test procedures, setups, and configurations. That repeatability is necessary for the testing to produce consistent pass or fail results against absolute performance requirements.

This focus sets TR-398 testing apart from much of the other widely available Wi-Fi testing, which concentrates on interoperability or on the functionality of specific features or components of the IEEE Wi-Fi specifications. Combining those two categories of testing (functionality and interoperability) with TR-398 performance testing creates a reliable process that manufacturers and service providers can use to deliver truly carrier-grade Wi-Fi to their subscribers.

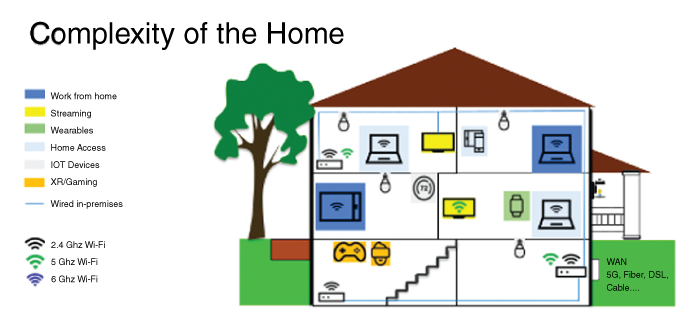

Service providers face a seemingly endless task of keeping up with growing application bandwidth while device counts expand at the same time. In Wi-Fi, where the physical layer is a shared and scarce resource, these two parameters are directly at odds with each other. The problem compounds because subscribers treat end-application performance as a measure of their overall internet performance, and they often observe it on mobile or other small devices with limited space available for antennas.

To give service providers the most applicable data on how their Wi-Fi devices can be expected to perform in the field, the TR-398 test cases have been designed around performance as an end user would observe it. For example, the testing defines performance requirements for cases using two spatial streams instead of four, and for 20-MHz bandwidth configurations at 2.4 GHz instead of 40 MHz.

Testing Real-World Scenarios

As such, the overall test coverage of TR-398 Issue 2 has focused on performance testing of real deployment scenarios that reflect how broadband subscribers use Wi-Fi (Fig. 1). More specifically, the testing can be broken down into a few key areas: coverage, capacity, and robustness.