Power over Ethernet (PoE) has become the colloquial term for any technology that allows an Ethernet device to transmit and receive data and also receive power over the same cabling. The benefits of using one cable for both data and power are numerous. Power can be delivered to small devices without having an electrician wire new circuits and without an ac-to-dc transformer (commonly called a wall wart). It can also reduce the weight and cost of deployments, and when standardized technology is used, it ensures a high level of safety.

However, from a user’s standpoint, the general term “PoE” could actually represent any number of different, incompatible technologies, which has led to considerable market confusion. To help overcome this confusion, the Ethernet Alliance, an industry forum that aims to advance and promote IEEE Ethernet standards, is launching a certification program through which manufacturers can earn a branded logo. The goal is to give users a simple way to identify which PoE products will work with each other and to promote interoperability in the marketplace.

The Past

Many different implementations of PoE have been used over the past 20 years. Generally speaking, the different forms of PoE can be placed into two categories: standardized and proprietary. To help put this into context, here’s a brief history of the technology itself and how it evolved.

The earliest form of PoE was most commonly referred to as “power injection.” These “power injectors” simply placed power, either ac or dc, on Ethernet cabling without any intelligent protocol or safety considerations.

There are many variants of power injectors, but probably the most common used the “spare” pairs not utilized by 100Base-TX Ethernet to inject the power. This method can damage or destroy devices that weren’t designed to accept power, so it couldn’t be considered a sustainable way to deliver power over data-communications cabling. The next natural step for the IEEE 802.3 Working Group, which defines and revises the standard commonly referred to as “Ethernet,” was to begin work on an inherently safe way to deploy PoE.

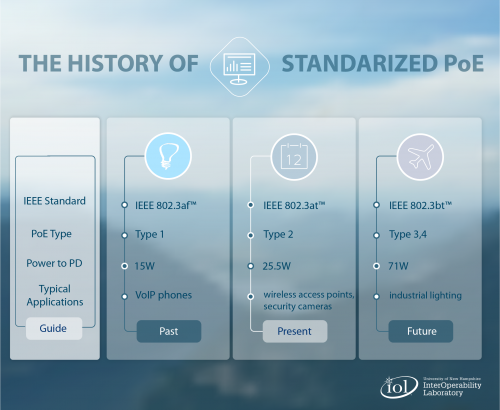

The IEEE 802.3 Working Group began its first PoE project, IEEE 802.3af, in 1999. Published under the cumbersome title “Clause 33. Data Terminal Equipment (DTE) Power via Media Dependent Interface (MDI),” it was eventually ratified in 2003. It defined Power Sourcing Equipment (PSE), which delivers power, and Powered Devices (PDs), which receive power.

The 802.3af standard allowed for delivery of up to 13 W of power to a PD using just two of the four twisted pairs in Ethernet cabling. It also gave more freedom in where a PD could be physically placed, because it was designed to support the entire 100-meter reach of the most common Ethernet speeds. While 13 W may not seem like a lot of power, it was adequate for many applications, including Voice over Internet Protocol (VoIP) telephones, stationary IP cameras, door access control units, low-power wireless access points, and many others.

PoE Powers Up to 25.5 W

Despite the popularity of the standard, many devices require more than 13 W for their intended application. The natural evolution was to allow more power in order to unleash these additional PoE applications. The IEEE 802.3 Working Group ratified its second PoE standard, IEEE 802.3at, in 2009. While still delivering power over only two pairs, it extended the original standard with a new power class that delivered up to 25.5 W to a PD. This allowed pan-and-tilt cameras and other devices with similar power requirements to take advantage of a standardized technology.
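
To make the two power ceilings concrete, here is a minimal Python sketch that picks the earliest of the two standards able to power a device. It uses only the PD-side figures quoted above (13 W and 25.5 W). The function name and the example wattages are hypothetical, chosen for illustration, and real PSE/PD negotiation involves classification steps that this sketch does not model.

```python
# PD-side power limits in watts, taken from the figures quoted in this article.
# Listed oldest standard first so the search returns the earliest match.
PD_POWER_LIMITS_W = [
    ("IEEE 802.3af", 13.0),
    ("IEEE 802.3at", 25.5),
]


def earliest_standard_for(required_w):
    """Return the first standard whose PD limit covers required_w, else None.

    Hypothetical helper for illustration only; it compares a device's
    power requirement against the per-standard ceilings listed above.
    """
    if required_w <= 0:
        raise ValueError("required power must be positive")
    for name, limit_w in PD_POWER_LIMITS_W:
        if required_w <= limit_w:
            return name
    return None


if __name__ == "__main__":
    # Example wattages are assumptions, not measured device figures.
    for label, watts in [("VoIP phone", 7.0), ("pan-and-tilt camera", 20.0)]:
        print(label, "->", earliest_standard_for(watts))
```

A device needing more than 25.5 W returns `None`, meaning neither of the two standards covered so far could power it.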