Continuous Integration (CI) lets developers test every change to their code without manually running tests on their own hardware. CI automates creating the test environment and running compile tests, unit tests, functional tests, and performance tests.

Today, there are many tools for implementing CI for a project, such as Jenkins, GitLab CI, and Bamboo. Public CI is a great asset to open source projects, but it can be a security risk because it allows remote code execution by design. By default, most public CI systems run tests in either a virtual machine (VM) or a container. VMs and containers work well for public CI because they can be spun up and thrown away very quickly. However, this can be problematic for some types of testing. For example, performance testing on specific hardware cannot easily be done within a VM or container, even with passthrough. If the tests don’t require performance results, specific hardware, or bare-metal configuration (such as BIOS changes), a VM with hardware passthrough may be a simpler way to securely test the submitted code or patches.

The classic solution is to set up a dedicated node with everything it needs to run the tests, also known as running on “bare metal.” In a default configuration, such a node is vulnerable to unintended persistence: for example, a test might install drivers into the kernel or application software onto the system, and those changes carry over into later jobs.

There are a few ways to harden a vulnerable bare-metal system. As with a VM, an image of the system can be captured in a clean state and restored when needed, using a tool such as FOG. However, imaging and restoring can take a long time, which means longer periods of CI downtime between jobs.

OverlayFS

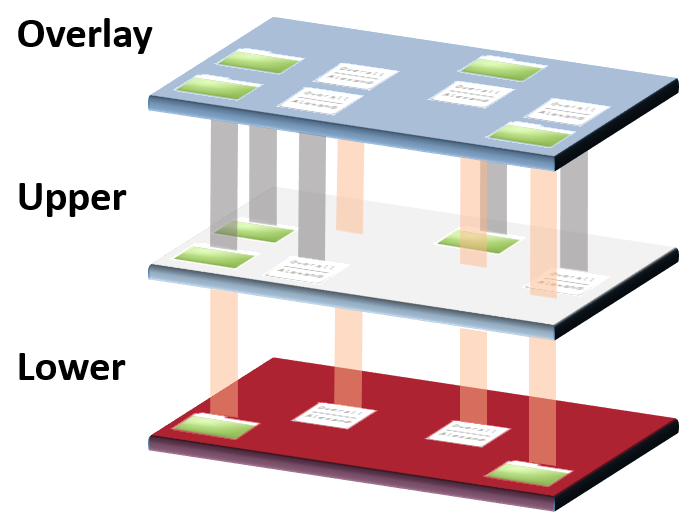

OverlayFS is another approach to this problem. It works much like a LiveCD for a Linux distribution installation: writes work transparently, but the server’s underlying file system stays read-only. The root file system is remounted read-only and becomes the “lower” file system, and a read-write tmpfs is created as the “upper” file system. The two are combined into the “overlay.” As with a LiveCD, rebooting the server returns it to its original state because the changes in the tmpfs upper layer are discarded. The server can be rebooted after each test or periodically. OverlayFS is even used by some LiveCD installers. This suits our bare-metal machines well, since rebooting a node always returns it to its original working state with minimal downtime.
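To make the lower/upper behavior concrete, here is a toy model in Python. It is not real file system code: it uses the standard library’s `collections.ChainMap`, which looks keys up in its first mapping before falling back to later ones and sends all writes to the first mapping. The paths and contents are hypothetical. Real OverlayFS also records deletions as “whiteout” entries and needs a work directory next to the upper layer, which this sketch ignores.

```python
from collections import ChainMap

# Toy model of overlay semantics, not real file system code. The "lower"
# dict plays the read-only root file system; each boot gets a fresh, empty
# "upper" dict that stands in for the tmpfs. Paths and contents are made up.
lower = {"/etc/hostname": "ci-node-01", "/usr/bin/gcc": "<binary>"}


def boot(lower_layer):
    """Return (upper, view) for a fresh boot with an empty upper layer."""
    upper = {}
    # ChainMap looks keys up in `upper` first, then in `lower_layer`,
    # and sends every write to the first mapping (`upper`).
    return upper, ChainMap(upper, lower_layer)


upper, root = boot(lower)

# A CI job "installs" a driver and edits an existing file.
root["/lib/modules/test-driver.ko"] = "<driver>"
root["/etc/hostname"] = "modified-by-job"
print(root["/etc/hostname"])   # modified-by-job (read comes from upper)
print(lower["/etc/hostname"])  # ci-node-01 (lower is untouched)

# "Rebooting" throws the tmpfs away, so the node is back to its clean state.
upper, root = boot(lower)
print("/lib/modules/test-driver.ko" in root)  # False
print(root["/etc/hostname"])                  # ci-node-01
```

The key property for CI is the last two lines: nothing a job writes survives a reboot, because every write landed in the discarded upper layer.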

Our CI nodes run Ubuntu 18.04. We use the “overlayroot” package, part of Ubuntu’s “cloud-initramfs-tools,” to set up this environment quickly.

We use a read-only NFS mount to share scripts common to all systems, since those scripts are updated often. We ran into errors mounting that file system while running in the overlay, which we quickly fixed by adding the “recurse=0” option to the configuration file. With this option set, only the initial mount of “/” is placed under the overlay. The systems also used “sssd” for shared logins across all systems, but it too produced errors in the overlay, so we disabled sssd on the systems and create accounts as needed.
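A node check could also confirm that the “recurse=0” option is actually present before trusting a node. The sketch below parses the `overlayroot=` line of an overlayroot.conf file. It assumes the shell-style form `overlayroot="tmpfs:recurse=0"` (a mode, optionally followed by a colon and comma-separated `key=value` options); the function name `parse_overlayroot` is hypothetical, and you should check the comments in your own overlayroot.conf for the exact syntax your version accepts.

```python
import shlex


def parse_overlayroot(conf_text):
    """Return (mode, options) from the overlayroot= line of overlayroot.conf.

    Assumes a value of the form MODE[:opt=val,opt=val], for example
    overlayroot="tmpfs:recurse=0". Blank lines and # comments are skipped.
    Returns (None, {}) if no overlayroot= line is found.
    """
    for line in conf_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if key.strip() != "overlayroot" or not sep:
            continue  # some other setting, e.g. overlayroot_cfgdisk=...
        # Remove shell-style quotes; anything after the quoted value is ignored.
        value = shlex.split(value)[0] if value.strip() else ""
        mode, _, opt_text = value.partition(":")
        options = dict(
            opt.split("=", 1) for opt in opt_text.split(",") if "=" in opt
        )
        return mode, options
    return None, {}


conf = '# overlayroot config\noverlayroot="tmpfs:recurse=0"\n'
mode, opts = parse_overlayroot(conf)
print(mode, opts)  # tmpfs {'recurse': '0'}
```

In a real check you would read the text from /etc/overlayroot.conf on the node and refuse to start the agent unless `mode == "tmpfs"` and `opts.get("recurse") == "0"`.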

Jenkins

The next step is to ensure that Jenkins only runs jobs on nodes that are booted into the overlay. We used the Slave Setup Plugin to solve this. With this plugin, a script of your choice runs before the agent starts on the node; if the script returns a non-zero exit status, the agent does not connect to the node. Since rebooting the node breaks the agent’s connection, the script always runs again after a reboot.

Below is a script that can be used to check if a system is running in an overlay:

```bash
#!/bin/bash
# Return 0 if read-only, 1 if read-write.
# This only applies to systems that use overlayroot.
# This will always return 0 for systems that do not use overlayroot.

# Fail closed: if the node cannot be reached, do not start the agent.
cmdline=$(ssh "$1" cat /proc/cmdline) || exit 1

# If "overlay" is in the cmdline, then something like
# "overlayroot=disabled" is in the cmdline, which means we are in rw.
if [[ $cmdline =~ "overlay" ]]; then
    echo "Overlay is disabled"
    exit 1
fi

echo "Overlay is enabled (or overlay is not set up)"
```

This is a basic and somewhat naive script. It relies on overlayroot’s temporary way of disabling the overlay, which is to add a kernel parameter (“overlayroot=disabled”) to the command line. Depending on how confident you are in each system’s setup, it may be better to check mount points instead of the cmdline. This script works well while you are still setting up some systems with overlayfs (using overlayroot) and some without. The scripts below also rely on this kernel parameter.
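As a sketch of the mount-point alternative, the Python below reads /proc/mounts over ssh and checks whether the file system visible at “/” has an overlay type. The names `root_is_overlay` and `check_node` are hypothetical. Current kernels report the type as `overlay`; accepting `overlayfs` as well is an assumption meant to cover older Ubuntu kernels. It sticks to `subprocess.run` arguments available in Python 3.6, the version shipped with Ubuntu 18.04.

```python
import subprocess

# File system type names that indicate an overlay on "/". "overlay" is used
# by current kernels; "overlayfs" is included as an assumption for older ones.
OVERLAY_FSTYPES = ("overlay", "overlayfs")


def root_is_overlay(mounts_text):
    """Return True if "/" is an overlay in /proc/mounts-style text."""
    root_fstype = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        mount_point, fstype = fields[1], fields[2]
        if mount_point == "/":
            # Later entries are stacked on top of earlier ones, so the last
            # mount on "/" is the one processes actually see.
            root_fstype = fstype
    return root_fstype in OVERLAY_FSTYPES


def check_node(host):
    """Return 0 if `host` has an overlay on "/", else 1 (fail closed)."""
    result = subprocess.run(
        ["ssh", host, "cat", "/proc/mounts"],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,  # Python 3.6 has no text= argument
    )
    if result.returncode != 0:
        return 1  # could not inspect the node, so do not start the agent
    return 0 if root_is_overlay(result.stdout) else 1
```

A setup script could end with `sys.exit(check_node(sys.argv[1]))`, mirroring the `$1` host argument of the bash version. Unlike the bash script, this check returns 1 on systems without overlayroot, so it only fits once every node runs in the overlay.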

Rebooting

The last step is maintaining the nodes: system updates and so on. Because any change made inside the overlay is discarded on reboot, this means rebooting the systems with the overlay disabled. We also need to reboot the systems as often as needed to remove any unintended persistence created by the CI. Since server hardware and firmware tend to have POST times of several minutes, we also use kexec to quickly reboot just the operating system instead of the whole server.

Below is a script used to reboot the system with the overlay disabled:

```python
#!/usr/bin/python3
# Reboot the system to read-write. This assumes the system is currently
# running in the overlay on an overlayfs enabled system.
from subprocess import check_output, check_call

kernel = check_output(['uname', '-r']).rstrip().decode('utf-8')

with open('/proc/cmdline') as f:
    cmdline = f.read().rstrip()

# Set the next boot cmdline to disable the overlay. The arguments go straight
# to kexec (no shell), so the cmdline must not be wrapped in extra quotes.
check_call(['sudo', 'kexec', '-l', f'/boot/vmlinuz-{kernel}',
            '--initrd', f'/boot/initrd.img-{kernel}',
            '--command-line', f'{cmdline} overlayroot=disabled'])

# Boot straight into the loaded kernel, skipping the firmware POST.
check_call(['sudo', 'systemctl', 'kexec'])
```

In this state, maintenance such as OS updates can be performed. Since the cmdline now contains “overlayroot=disabled”, the check script that Jenkins runs exits with a non-zero status, so the agent will not start and no jobs will run.

When maintenance is done, the below script can be used to reboot back into the overlay:

```python
#!/usr/bin/python3
# Reboot the system to read-only. This assumes the system was already in
# read-write on an overlayfs enabled system.
import re
from subprocess import check_output, check_call

kernel = check_output(['uname', '-r']).rstrip().decode('utf-8')

with open('/proc/cmdline') as f:
    cmdline = f.read().rstrip()

# Remove overlayroot=disabled from the cmdline...
cmdline = re.sub(r' overlayroot=disabled', '', cmdline)

# ...and use the modified cmdline for the next boot. As before, the argument
# is passed directly to kexec, so no extra quotes are added.
check_call(['sudo', 'kexec', '-l', f'/boot/vmlinuz-{kernel}',
            '--initrd', f'/boot/initrd.img-{kernel}',
            '--command-line', cmdline])

check_call(['sudo', 'systemctl', 'kexec'])
```
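Both reboot scripts share the same cmdline and kexec logic, which is easy to get subtly wrong (for example, appending “overlayroot=disabled” twice, or passing literal quote characters to kexec). One option is to factor that logic into small pure functions that can be tested without rebooting anything. The sketch below uses hypothetical names and assumes the cmdline contains no quoted parameters with embedded spaces, since it splits on whitespace.

```python
DISABLE_FLAG = "overlayroot=disabled"


def with_overlay_disabled(cmdline):
    """Return cmdline with overlayroot=disabled appended exactly once."""
    args = cmdline.split()
    if DISABLE_FLAG not in args:
        args.append(DISABLE_FLAG)
    return " ".join(args)


def with_overlay_enabled(cmdline):
    """Return cmdline with every overlayroot=disabled token removed."""
    return " ".join(arg for arg in cmdline.split() if arg != DISABLE_FLAG)


def kexec_load_argv(kernel, cmdline):
    """Build the `sudo kexec -l ...` argument list used by both scripts.

    Each element is passed to kexec as-is, so no shell quoting is added.
    """
    return ["sudo", "kexec", "-l", f"/boot/vmlinuz-{kernel}",
            "--initrd", f"/boot/initrd.img-{kernel}",
            "--command-line", cmdline]


if __name__ == "__main__":
    current = "root=/dev/sda1 ro quiet"  # hypothetical cmdline
    print(with_overlay_disabled(current))  # root=/dev/sda1 ro quiet overlayroot=disabled
```

With these helpers, each script reduces to reading /proc/cmdline, transforming it, and passing `kexec_load_argv(...)` to `check_call` before `systemctl kexec`.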

There are safeguards in case a script submitted to the CI tries to reboot the system outside the overlay. On its own, a reboot always brings the system back into the overlay. Completely disabling the overlay takes two steps: first, update the cmdline to disable the overlay; second, update “overlayroot.conf”. Any change to overlayroot.conf made from inside the overlay only lands in the tmpfs and is discarded on the next reboot, so the second step requires the first. The only outside point of entry is Jenkins, and once the first step is performed, Jenkins can no longer start the agent, so no outside connection is available to carry out the second step.

If a public CI requires bare metal for testing, and VMs with passthrough are not a suitable option, then overlayfs can be a valuable step toward protecting against unintended persistence.